API Rate Limits

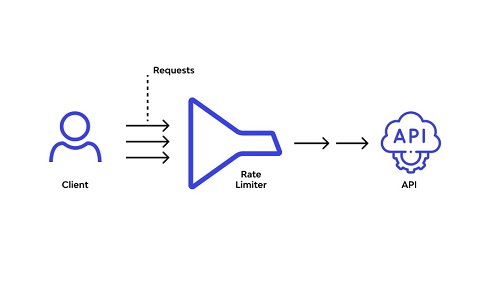

API rate limits are used to prevent overuse of an API, which can have negative consequences for the API provider and for users of the API. Here are a few reasons why API rate limits are necessary for public APIs:

To protect against abuse and malicious activity: Without rate limits, an API can be vulnerable to abuse by malicious users who may send a large number of requests in an attempt to overwhelm the API or extract sensitive data. Rate limits help to mitigate this risk by limiting the number of requests that can be made within a certain time period.

To prevent resource exhaustion: API servers have limited resources such as CPU, memory, and network bandwidth. Without rate limits, a sudden spike in traffic can overwhelm an API, leading to poor performance or even downtime. Rate limits help to prevent resource exhaustion by limiting the number of requests that can be made within a given time period.

To ensure fair usage: Public APIs may have many different users, and it is important to ensure that all users have fair access to the API. Without rate limits, a single user or application could make a large number of requests and use up a disproportionate amount of resources, potentially causing problems for other users. Rate limits help to ensure that all users can access the API fairly by limiting the number of requests that can be made by any single user or application.

Overall, API rate limits are important for maintaining the stability, reliability, and fairness of public APIs.

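Because every API server reads and updates the same Redis counters, a Redis-based limit applies across the whole system rather than to each server separately. To implement an API rate limit with Redis in a distributed system, you can follow these steps: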

- Set up a Redis server and install the Redis client library for your programming language.

- For each API endpoint that you want to rate limit, define the maximum number of requests that can be made within a certain time period (e.g. 100 requests per minute).

- When a request is received for the API endpoint, use the Redis INCR command to increment the key that holds the request count for that endpoint. INCR is atomic and returns the new value, so concurrent requests handled by different servers are all counted.

- Before processing the request, compare the value returned by INCR with the rate limit. If it is greater than the limit, return an error response (e.g. HTTP 429 “Too Many Requests”) and do not process the request. Otherwise, process the request and return the response as usual.

- Set up a background job to periodically reset the request count for each endpoint (e.g. every minute). The job can find all the counter keys and set them back to zero.
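To make the logic of the last three steps testable without a Redis server, the following minimal sketch puts the two Redis operations behind a small interface. The names `CounterStore`, `InMemoryCounterStore` and `EndpointLimiter` are hypothetical. The in-memory store only mimics INCR and the reset job inside a single process. In a distributed deployment, a Redis-backed implementation would take its place so that all servers share the same counters.

```kotlin
import java.util.concurrent.ConcurrentHashMap
import java.util.concurrent.atomic.AtomicLong

// Hypothetical abstraction over the two Redis operations the steps rely on
interface CounterStore {
    // Atomically increment the counter at `key` and return the new value (like INCR)
    fun incr(key: String): Long

    // Set every counter whose key starts with `prefix` back to zero (the reset job)
    fun resetAll(prefix: String)
}

// In-memory stand-in for Redis; it only shares counters within one process
class InMemoryCounterStore : CounterStore {
    private val counters = ConcurrentHashMap<String, AtomicLong>()

    override fun incr(key: String): Long =
        counters.computeIfAbsent(key) { AtomicLong() }.incrementAndGet()

    override fun resetAll(prefix: String) {
        for ((key, count) in counters) {
            if (key.startsWith(prefix)) count.set(0)
        }
    }
}

// Applies the same limit to each endpoint's counter
class EndpointLimiter(private val store: CounterStore, private val limit: Long) {
    fun handle(endpoint: String): Pair<String, Int> {
        // Increment first, then compare the returned count with the limit
        val count = store.incr("request_count:$endpoint")
        return if (count > limit) "Too Many Requests" to 429 else "OK" to 200
    }

    // What the periodic background job would call
    fun reset() = store.resetAll("request_count:")
}
```

A Redis-backed `CounterStore` could implement `incr` with the INCR command and `resetAll` with the same key lookup and SET calls that the background job in the example uses.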

Example in Kotlin

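```kotlin
import redis.clients.jedis.JedisPool
import java.time.Duration
import kotlin.concurrent.thread

// Minimal request type for this example (hypothetical; use your framework's request class)
data class Request(val endpoint: String)

// Set up the Redis client. A single Jedis connection is not thread-safe,
// so connections are borrowed from a pool instead.
val pool = JedisPool("localhost", 6379)

// Define the rate limit (e.g. 100 requests per minute)
const val RATE_LIMIT = 100
val INTERVAL: Duration = Duration.ofMinutes(1)

fun processRequest(request: Request): Pair<String, Int> {
    // INCR atomically increments the endpoint's counter and returns the new value
    val key = "request_count:${request.endpoint}"
    val currentCount = pool.resource.use { jedis -> jedis.incr(key) }

    // Reject the request if the rate limit has been exceeded
    if (currentCount > RATE_LIMIT) {
        return "Too Many Requests" to 429
    }

    // Process the request
    // ...

    return "OK" to 200
}

fun resetRequestCounts() {
    pool.resource.use { jedis ->
        // Reset the request counts for all endpoints.
        // KEYS walks the whole keyspace and blocks Redis while it runs;
        // SCAN is the usual alternative on large instances.
        for (key in jedis.keys("request_count:*")) {
            jedis.set(key, "0")
        }
    }
}

fun main() {
    // Background job: reset the request counts once per interval
    thread(isDaemon = true) {
        while (true) {
            resetRequestCounts()
            Thread.sleep(INTERVAL.toMillis())
        }
    }

    // ... start the API server here; it keeps the process alive ...
}
```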

This approach is simple to implement and relatively efficient. Checking the rate limit takes a single Redis command (INCR), and resetting takes one SET per counter key. However, it can be less accurate than other approaches because it relies on a background job to reset the request counts, and that job may not always run exactly on time. The reset schedule is also independent of when requests arrive. Callers can therefore send up to the full limit just before a reset and again just after it, briefly reaching about twice the intended rate.

The example uses the Jedis library to connect to the Redis server and issue Redis commands. The processRequest function returns a Pair of the response body and the HTTP status code. The resetRequestCounts function uses the KEYS command to find all keys that match the pattern “request_count:*” and sets each value to zero with SET. KEYS walks the entire keyspace and blocks the server while it runs, so SCAN is generally preferred on large production instances. The background job that resets the request counts runs in an infinite loop and sleeps for one interval between iterations.
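One way to avoid depending on the background job's timing is to put the window number in the key (for example `request_count:<endpoint>:<window>`) and let each key expire on its own. In Redis this typically means calling EXPIRE on the key when INCR returns 1. The two commands are not atomic unless they are wrapped in MULTI/EXEC or a Lua script. The class below is a hypothetical in-memory model of that behaviour, with an injectable clock so that it runs without a server. `ExpiringWindowLimiter` and its parameters are illustrative names, not part of any library.

```kotlin
// Hypothetical in-memory model of fixed-window counters whose keys expire,
// mirroring INCR + EXPIRE on keys like "request_count:<endpoint>:<window>"
class ExpiringWindowLimiter(
    private val limit: Long,
    private val windowMillis: Long,
    // Injectable clock in milliseconds, so tests can control time
    private val clock: () -> Long = System::currentTimeMillis,
) {
    private class Counter(var count: Long, val expiresAt: Long)

    private val counters = HashMap<String, Counter>()

    @Synchronized
    fun handle(endpoint: String): Pair<String, Int> {
        val now = clock()
        val window = now / windowMillis
        val key = "request_count:$endpoint:$window"

        // Remove counters whose window has ended, as Redis does when a TTL elapses
        counters.values.removeAll { it.expiresAt <= now }

        // Equivalent of INCR; a new key is given an expiry at the end of its window
        val counter = counters.getOrPut(key) { Counter(0, (window + 1) * windowMillis) }
        counter.count++

        return if (counter.count > limit) "Too Many Requests" to 429 else "OK" to 200
    }
}
```

A new interval produces a new key that starts at zero, and old keys disappear instead of piling up, so no reset job is needed. This variant still counts in fixed windows, however. A caller can still send up to the limit at the end of one window and again at the start of the next.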